From be72c825d2214af7810618fc0fa42f60a3a4f303 Mon Sep 17 00:00:00 2001

From: LarFii <834462287@qq.com>

Date: Wed, 4 Dec 2024 16:01:19 +0800

Subject: [PATCH 1/4] fix entity extract

---

lightrag/operate.py | 4 +++-

1 file changed, 3 insertions(+), 1 deletion(-)
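Note for reviewers: the patch does not state why `format` is applied twice. One plausible reading is that a value in `context_base` (for instance the few-shot examples) contains placeholders of its own, which a single pass leaves unresolved. The sketch below illustrates that reading with hypothetical stand-ins for the template, the context, and the chunk text; none of these values come from LightRAG.

```python
# Hypothetical stand-ins for illustration only; not the real prompt or context.
template = "Delimiter: {tuple_delimiter}\nExamples:\n{examples}\nText: {input_text}"
context_base = {
    "tuple_delimiter": "<|>",
    # The examples string carries its own placeholder.
    "examples": '("entity"{tuple_delimiter}"Alice")',
}
content = "Alice wrote {x: 1} in her notes."

# Single pass: the placeholder inside the substituted examples stays literal.
single_pass = template.format(**context_base, input_text=content)

# Two passes: the first pass substitutes the examples and keeps {input_text}
# as a placeholder; the second pass resolves the nested placeholder and
# inserts the raw chunk text last, so braces in the text are never re-parsed.
two_pass = template.format(**context_base, input_text="{input_text}").format(
    **context_base, input_text=content
)

print(two_pass)
```

Because the second pass parses the whole intermediate string, any literal braces in the template or in the examples would have to be escaped as `{{` and `}}` for this approach to work.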

diff --git a/lightrag/operate.py b/lightrag/operate.py

index a40af9e0..09585e50 100644

--- a/lightrag/operate.py

+++ b/lightrag/operate.py

@@ -296,7 +296,9 @@ async def extract_entities(

chunk_key = chunk_key_dp[0]

chunk_dp = chunk_key_dp[1]

content = chunk_dp["content"]

- hint_prompt = entity_extract_prompt.format(**context_base, input_text=content)

+ # hint_prompt = entity_extract_prompt.format(**context_base, input_text=content)

+ hint_prompt = entity_extract_prompt.format(**context_base, input_text="{input_text}").format(**context_base, input_text=content)

+

final_result = await use_llm_func(hint_prompt)

history = pack_user_ass_to_openai_messages(hint_prompt, final_result)

for now_glean_index in range(entity_extract_max_gleaning):

From 44d441a951e259e3f5bffb729cb1dfea6c2cdd8f Mon Sep 17 00:00:00 2001

From: LarFii <834462287@qq.com>

Date: Wed, 4 Dec 2024 19:44:04 +0800

Subject: [PATCH 2/4] update insert custom kg

---

Dockerfile | 56 -------------------------

README.md | 70 ++++++++------------------------

examples/insert_custom_kg.py | 34 ++++++++--------

examples/lightrag_nvidia_demo.py | 59 +++++++++++++++------------

lightrag/__init__.py | 2 +-

lightrag/lightrag.py | 38 ++++++++++++++++-

lightrag/llm.py | 18 +++++---

lightrag/operate.py | 5 ++-

8 files changed, 119 insertions(+), 163 deletions(-)

delete mode 100644 Dockerfile
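Note for reviewers: with this patch, `ainsert_custom_kg` resolves the `source_id` label on each entity and relationship to the hashed ID of the chunk carrying the same label. The sketch below reproduces only that lookup, outside of LightRAG. The `compute_mdhash_id` body is an assumed stand-in (prefix plus MD5 hex digest), and the data is a hypothetical, trimmed-down `custom_kg`.

```python
from hashlib import md5


def compute_mdhash_id(content: str, prefix: str = "") -> str:
    # Assumed stand-in for the LightRAG helper: prefix + MD5 hex digest of the text.
    return prefix + md5(content.encode()).hexdigest()


# Hypothetical data in the shape used by examples/insert_custom_kg.py.
custom_kg = {
    "chunks": [
        {"content": "ProductX, developed by CompanyA. ", "source_id": "Source1"},
        {"content": "None", "source_id": "UNKNOWN"},
    ],
    "entities": [
        {"entity_name": "CompanyA", "source_id": "Source1"},
        {"entity_name": "CompanyD", "source_id": "Source4"},  # no matching chunk
        {"entity_name": "PersonB"},  # no source_id at all
    ],
}

# Map each source label to the hashed ID of its stripped chunk text.
source_to_chunk_id = {}
for chunk in custom_kg.get("chunks", []):
    text = chunk["content"].strip()
    source_to_chunk_id[chunk["source_id"]] = compute_mdhash_id(text, prefix="chunk-")

# Resolve each entity's label to a chunk ID, falling back to "UNKNOWN".
resolved = {}
for entity in custom_kg.get("entities", []):
    label = entity.get("source_id", "UNKNOWN")
    resolved[entity["entity_name"]] = source_to_chunk_id.get(label, "UNKNOWN")

for name, chunk_id in resolved.items():
    print(name, "->", chunk_id)
```

Two consequences follow from the lookup as written. A label with no matching chunk (here `Source4`) resolves to the literal `"UNKNOWN"` and would trigger the new warning. An entity with no `source_id` resolves to the placeholder chunk labelled `"UNKNOWN"`, when one is supplied. If two chunks share a label, the later one overwrites the earlier one in the map.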

diff --git a/Dockerfile b/Dockerfile

deleted file mode 100644

index 787816fe..00000000

--- a/Dockerfile

+++ /dev/null

@@ -1,56 +0,0 @@

-FROM debian:bullseye-slim

-ENV JAVA_HOME=/opt/java/openjdk

-COPY --from=eclipse-temurin:17 $JAVA_HOME $JAVA_HOME

-ENV PATH="${JAVA_HOME}/bin:${PATH}" \

- NEO4J_SHA256=7ce97bd9a4348af14df442f00b3dc5085b5983d6f03da643744838c7a1bc8ba7 \

- NEO4J_TARBALL=neo4j-enterprise-5.24.2-unix.tar.gz \

- NEO4J_EDITION=enterprise \

- NEO4J_HOME="/var/lib/neo4j" \

- LANG=C.UTF-8

-ARG NEO4J_URI=https://dist.neo4j.org/neo4j-enterprise-5.24.2-unix.tar.gz

-

-RUN addgroup --gid 7474 --system neo4j && adduser --uid 7474 --system --no-create-home --home "${NEO4J_HOME}" --ingroup neo4j neo4j

-

-COPY ./local-package/* /startup/

-

-RUN apt update \

- && apt-get install -y curl gcc git jq make procps tini wget \

- && curl --fail --silent --show-error --location --remote-name ${NEO4J_URI} \

- && echo "${NEO4J_SHA256} ${NEO4J_TARBALL}" | sha256sum -c --strict --quiet \

- && tar --extract --file ${NEO4J_TARBALL} --directory /var/lib \

- && mv /var/lib/neo4j-* "${NEO4J_HOME}" \

- && rm ${NEO4J_TARBALL} \

- && sed -i 's/Package Type:.*/Package Type: docker bullseye/' $NEO4J_HOME/packaging_info \

- && mv /startup/neo4j-admin-report.sh "${NEO4J_HOME}"/bin/neo4j-admin-report \

- && mv "${NEO4J_HOME}"/data /data \

- && mv "${NEO4J_HOME}"/logs /logs \

- && chown -R neo4j:neo4j /data \

- && chmod -R 777 /data \

- && chown -R neo4j:neo4j /logs \

- && chmod -R 777 /logs \

- && chown -R neo4j:neo4j "${NEO4J_HOME}" \

- && chmod -R 777 "${NEO4J_HOME}" \

- && chmod -R 755 "${NEO4J_HOME}/bin" \

- && ln -s /data "${NEO4J_HOME}"/data \

- && ln -s /logs "${NEO4J_HOME}"/logs \

- && git clone https://github.com/ncopa/su-exec.git \

- && cd su-exec \

- && git checkout 4c3bb42b093f14da70d8ab924b487ccfbb1397af \

- && echo d6c40440609a23483f12eb6295b5191e94baf08298a856bab6e15b10c3b82891 su-exec.c | sha256sum -c \

- && echo 2a87af245eb125aca9305a0b1025525ac80825590800f047419dc57bba36b334 Makefile | sha256sum -c \

- && make \

- && mv /su-exec/su-exec /usr/bin/su-exec \

- && apt-get -y purge --auto-remove curl gcc git make \

- && rm -rf /var/lib/apt/lists/* /su-exec

-

-

-ENV PATH "${NEO4J_HOME}"/bin:$PATH

-

-WORKDIR "${NEO4J_HOME}"

-

-VOLUME /data /logs

-

-EXPOSE 7474 7473 7687

-

-ENTRYPOINT ["tini", "-g", "--", "/startup/docker-entrypoint.sh"]

-CMD ["neo4j"]

diff --git a/README.md b/README.md

index 02ecbe4e..40908c3d 100644

--- a/README.md

+++ b/README.md

@@ -42,9 +42,9 @@ This repository hosts the code of LightRAG. The structure of this code is based

## Algorithm Flowchart

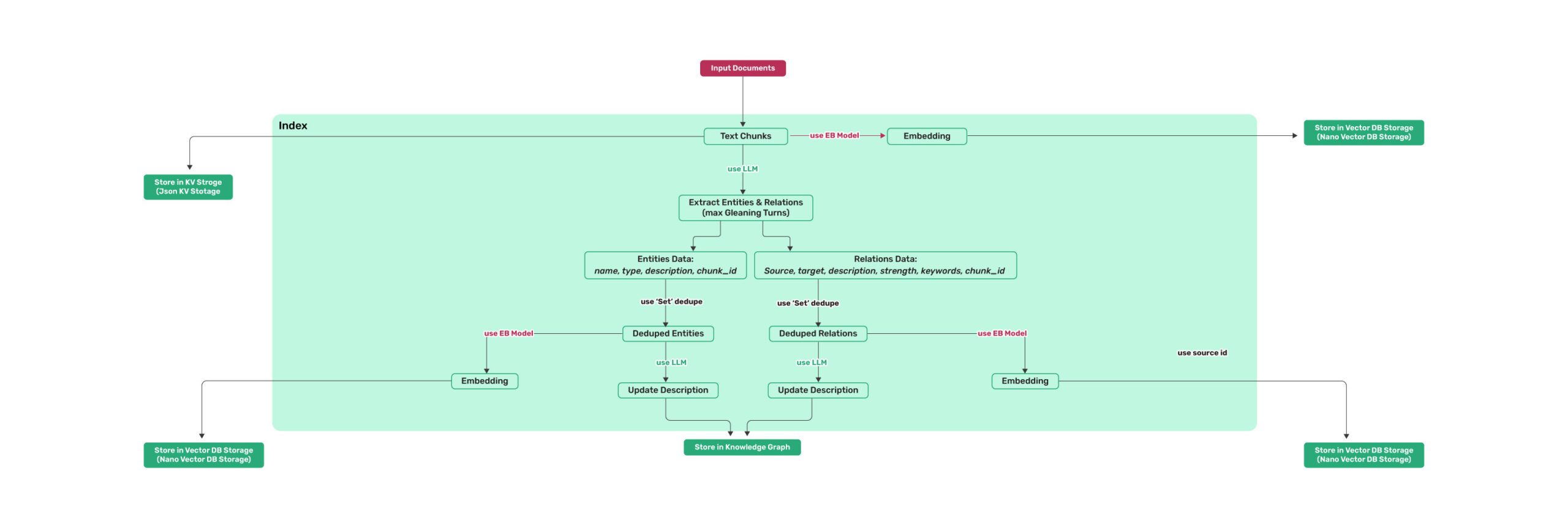

-*Figure 1: LightRAG Indexing Flowchart*

+*Figure 1: LightRAG Indexing Flowchart (image [source](https://learnopencv.com/lightrag/))*

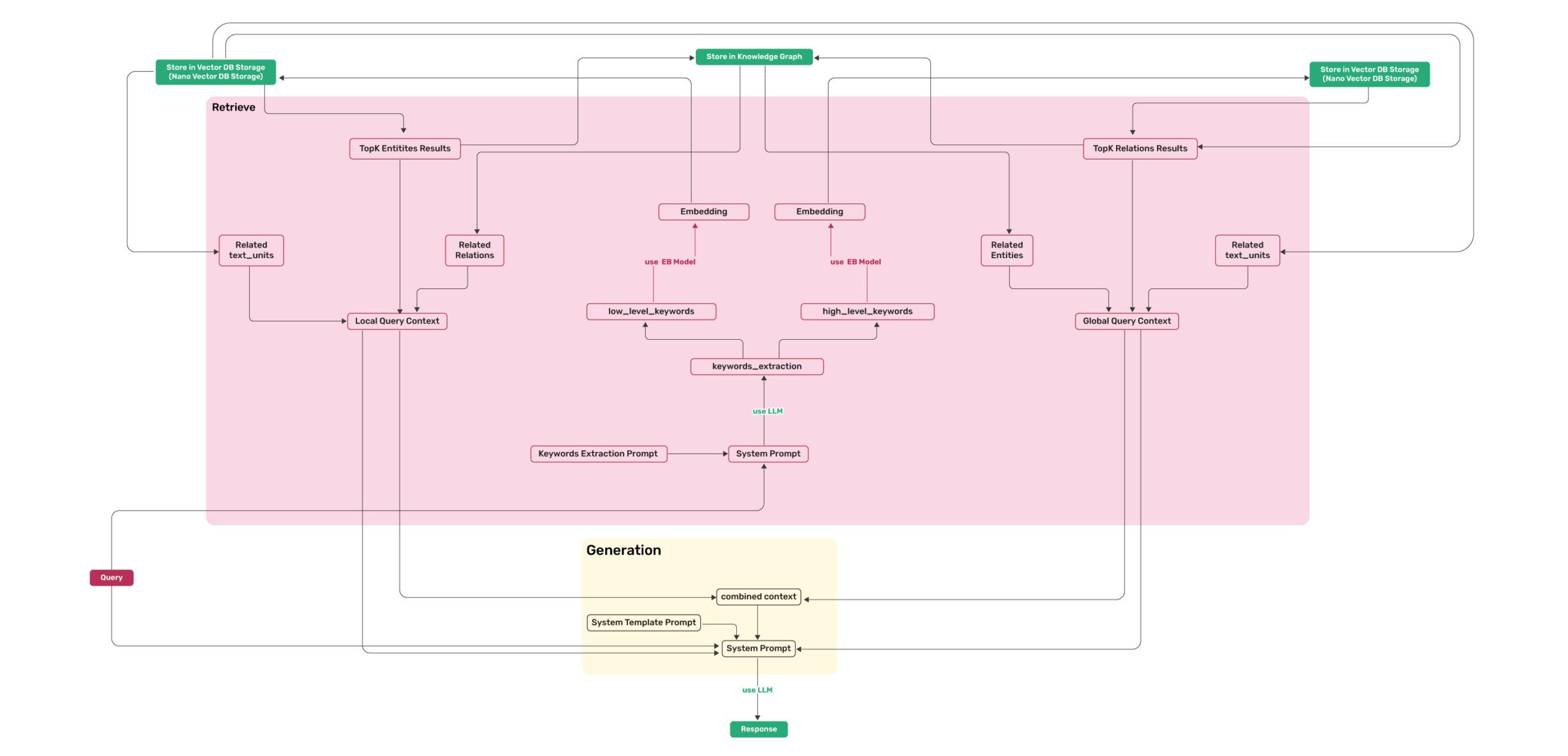

-*Figure 2: LightRAG Retrieval and Querying Flowchart*

+*Figure 2: LightRAG Retrieval and Querying Flowchart (image [source](https://learnopencv.com/lightrag/))*

## Install

@@ -364,7 +364,21 @@ custom_kg = {

"weight": 1.0,

"source_id": "Source1"

}

- ]

+ ],

+ "chunks": [

+ {

+ "content": "ProductX, developed by CompanyA, has revolutionized the market with its cutting-edge features.",

+ "source_id": "Source1",

+ },

+ {

+ "content": "PersonA is a prominent researcher at UniversityB, focusing on artificial intelligence and machine learning.",

+ "source_id": "Source2",

+ },

+ {

+ "content": "None",

+ "source_id": "UNKNOWN",

+ },

+ ],

}

rag.insert_custom_kg(custom_kg)

@@ -947,56 +961,6 @@ def extract_queries(file_path):

```

-## Code Structure

-

-```python

-.

-├── examples

-│ ├── batch_eval.py

-│ ├── generate_query.py

-│ ├── graph_visual_with_html.py

-│ ├── graph_visual_with_neo4j.py

-│ ├── lightrag_api_openai_compatible_demo.py

-│ ├── lightrag_azure_openai_demo.py

-│ ├── lightrag_bedrock_demo.py

-│ ├── lightrag_hf_demo.py

-│ ├── lightrag_lmdeploy_demo.py

-│ ├── lightrag_ollama_demo.py

-│ ├── lightrag_openai_compatible_demo.py

-│ ├── lightrag_openai_demo.py

-│ ├── lightrag_siliconcloud_demo.py

-│ └── vram_management_demo.py

-├── lightrag

-│ ├── kg

-│ │ ├── __init__.py

-│ │ └── neo4j_impl.py

-│ ├── __init__.py

-│ ├── base.py

-│ ├── lightrag.py

-│ ├── llm.py

-│ ├── operate.py

-│ ├── prompt.py

-│ ├── storage.py

-│ └── utils.py

-├── reproduce

-│ ├── Step_0.py

-│ ├── Step_1_openai_compatible.py

-│ ├── Step_1.py

-│ ├── Step_2.py

-│ ├── Step_3_openai_compatible.py

-│ └── Step_3.py

-├── .gitignore

-├── .pre-commit-config.yaml

-├── Dockerfile

-├── get_all_edges_nx.py

-├── LICENSE

-├── README.md

-├── requirements.txt

-├── setup.py

-├── test_neo4j.py

-└── test.py

-```

-

## Star History

diff --git a/examples/insert_custom_kg.py b/examples/insert_custom_kg.py

index 19da0f29..1c02ea25 100644

--- a/examples/insert_custom_kg.py

+++ b/examples/insert_custom_kg.py

@@ -56,18 +56,6 @@ custom_kg = {

"description": "An annual technology conference held in CityC",

"source_id": "Source3",

},

- {

- "entity_name": "CompanyD",

- "entity_type": "Organization",

- "description": "A financial services company specializing in insurance",

- "source_id": "Source4",

- },

- {

- "entity_name": "ServiceZ",

- "entity_type": "Service",

- "description": "An insurance product offered by CompanyD",

- "source_id": "Source4",

- },

],

"relationships": [

{

@@ -94,13 +82,23 @@ custom_kg = {

"weight": 0.8,

"source_id": "Source3",

},

+ ],

+ "chunks": [

{

- "src_id": "CompanyD",

- "tgt_id": "ServiceZ",

- "description": "CompanyD provides ServiceZ",

- "keywords": "provide, offer",

- "weight": 1.0,

- "source_id": "Source4",

+ "content": "ProductX, developed by CompanyA, has revolutionized the market with its cutting-edge features.",

+ "source_id": "Source1",

+ },

+ {

+ "content": "PersonA is a prominent researcher at UniversityB, focusing on artificial intelligence and machine learning.",

+ "source_id": "Source2",

+ },

+ {

+ "content": "EventY, held in CityC, attracts technology enthusiasts and companies from around the globe.",

+ "source_id": "Source3",

+ },

+ {

+ "content": "None",

+ "source_id": "UNKNOWN",

},

],

}

diff --git a/examples/lightrag_nvidia_demo.py b/examples/lightrag_nvidia_demo.py

index 10d43c42..5af562b0 100644

--- a/examples/lightrag_nvidia_demo.py

+++ b/examples/lightrag_nvidia_demo.py

@@ -1,11 +1,14 @@

import os

import asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, nvidia_openai_embedding, nvidia_openai_complete

+from lightrag.llm import (

+ openai_complete_if_cache,

+ nvidia_openai_embedding,

+)

from lightrag.utils import EmbeddingFunc

import numpy as np

-#for custom llm_model_func

+# for custom llm_model_func

from lightrag.utils import locate_json_string_body_from_string

WORKING_DIR = "./dickens"

@@ -13,14 +16,15 @@ WORKING_DIR = "./dickens"

if not os.path.exists(WORKING_DIR):

os.mkdir(WORKING_DIR)

-#some method to use your API key (choose one)

+# ways to supply your API key (choose one)

# NVIDIA_OPENAI_API_KEY = os.getenv("NVIDIA_OPENAI_API_KEY")

-NVIDIA_OPENAI_API_KEY = "nvapi-xxxx" #your api key

+NVIDIA_OPENAI_API_KEY = "nvapi-xxxx" # your api key

# using pre-defined function for nvidia LLM API. OpenAI compatible

# llm_model_func = nvidia_openai_complete

-#If you trying to make custom llm_model_func to use llm model on NVIDIA API like other example:

+

+# If you are trying to write a custom llm_model_func that uses an LLM on the NVIDIA API, as in the other examples:

async def llm_model_func(

prompt, system_prompt=None, history_messages=[], keyword_extraction=False, **kwargs

) -> str:

@@ -37,36 +41,41 @@ async def llm_model_func(

return locate_json_string_body_from_string(result)

return result

-#custom embedding

+

+# custom embedding

nvidia_embed_model = "nvidia/nv-embedqa-e5-v5"

+

+

async def indexing_embedding_func(texts: list[str]) -> np.ndarray:

return await nvidia_openai_embedding(

texts,

- model = nvidia_embed_model, #maximum 512 token

+ model=nvidia_embed_model, # maximum 512 tokens

# model="nvidia/llama-3.2-nv-embedqa-1b-v1",

api_key=NVIDIA_OPENAI_API_KEY,

base_url="https://integrate.api.nvidia.com/v1",

- input_type = "passage",

- trunc = "END", #handling on server side if input token is longer than maximum token

- encode = "float"

+ input_type="passage",

+ trunc="END", # handled on the server side if the input is longer than the maximum token count

+ encode="float",

)

+

async def query_embedding_func(texts: list[str]) -> np.ndarray:

return await nvidia_openai_embedding(

texts,

- model = nvidia_embed_model, #maximum 512 token

+ model=nvidia_embed_model, # maximum 512 tokens

# model="nvidia/llama-3.2-nv-embedqa-1b-v1",

api_key=NVIDIA_OPENAI_API_KEY,

base_url="https://integrate.api.nvidia.com/v1",

- input_type = "query",

- trunc = "END", #handling on server side if input token is longer than maximum token

- encode = "float"

+ input_type="query",

+ trunc="END", # handled on the server side if the input is longer than the maximum token count

+ encode="float",

)

-#dimension are same

+

+# both embedding functions return the same dimension

async def get_embedding_dim():

test_text = ["This is a test sentence."]

- embedding = await indexing_embedding_func(test_text)

+ embedding = await indexing_embedding_func(test_text)

embedding_dim = embedding.shape[1]

return embedding_dim

@@ -88,29 +97,29 @@ async def main():

embedding_dimension = await get_embedding_dim()

print(f"Detected embedding dimension: {embedding_dimension}")

- #lightRAG class during indexing

+ # LightRAG instance used during indexing

rag = LightRAG(

working_dir=WORKING_DIR,

llm_model_func=llm_model_func,

- # llm_model_name="meta/llama3-70b-instruct", #un comment if

+ # llm_model_name="meta/llama3-70b-instruct", #un comment if

embedding_func=EmbeddingFunc(

embedding_dim=embedding_dimension,

- max_token_size=512, #maximum token size, somehow it's still exceed maximum number of token

- #so truncate (trunc) parameter on embedding_func will handle it and try to examine the tokenizer used in LightRAG

- #so you can adjust to be able to fit the NVIDIA model (future work)

+ max_token_size=512, # maximum token size; inputs can somehow still exceed the token limit,

+ # so the truncate (trunc) parameter on embedding_func handles it. Consider examining the tokenizer used in LightRAG

+ # so you can adjust this to fit the NVIDIA model (future work)

func=indexing_embedding_func,

),

)

-

- #reading file

+

+ # reading file

with open("./book.txt", "r", encoding="utf-8") as f:

await rag.ainsert(f.read())

- #redefine rag to change embedding into query type

+ # redefine rag to switch the embedding function to the query type

rag = LightRAG(

working_dir=WORKING_DIR,

llm_model_func=llm_model_func,

- # llm_model_name="meta/llama3-70b-instruct", #un comment if

+ # llm_model_name="meta/llama3-70b-instruct", #un comment if

embedding_func=EmbeddingFunc(

embedding_dim=embedding_dimension,

max_token_size=512,

diff --git a/lightrag/__init__.py b/lightrag/__init__.py

index a8b60e55..ea579af2 100644

--- a/lightrag/__init__.py

+++ b/lightrag/__init__.py

@@ -1,5 +1,5 @@

from .lightrag import LightRAG as LightRAG, QueryParam as QueryParam

-__version__ = "1.0.2"

+__version__ = "1.0.3"

__author__ = "Zirui Guo"

__url__ = "https://github.com/HKUDS/LightRAG"

diff --git a/lightrag/lightrag.py b/lightrag/lightrag.py

index 97b2f256..f2f8d07a 100644

--- a/lightrag/lightrag.py

+++ b/lightrag/lightrag.py

@@ -329,13 +329,39 @@ class LightRAG:

async def ainsert_custom_kg(self, custom_kg: dict):

update_storage = False

try:

+ # Insert chunks into vector storage

+ all_chunks_data = {}

+ chunk_to_source_map = {}

+ for chunk_data in custom_kg.get("chunks", []):

+ chunk_content = chunk_data["content"]

+ source_id = chunk_data["source_id"]

+ chunk_id = compute_mdhash_id(chunk_content.strip(), prefix="chunk-")

+

+ chunk_entry = {"content": chunk_content.strip(), "source_id": source_id}

+ all_chunks_data[chunk_id] = chunk_entry

+ chunk_to_source_map[source_id] = chunk_id

+ update_storage = True

+

+ if self.chunks_vdb is not None and all_chunks_data:

+ await self.chunks_vdb.upsert(all_chunks_data)

+ if self.text_chunks is not None and all_chunks_data:

+ await self.text_chunks.upsert(all_chunks_data)

+

# Insert entities into knowledge graph

all_entities_data = []

for entity_data in custom_kg.get("entities", []):

entity_name = f'"{entity_data["entity_name"].upper()}"'

entity_type = entity_data.get("entity_type", "UNKNOWN")

description = entity_data.get("description", "No description provided")

- source_id = entity_data["source_id"]

+ # source_id = entity_data["source_id"]

+ source_chunk_id = entity_data.get("source_id", "UNKNOWN")

+ source_id = chunk_to_source_map.get(source_chunk_id, "UNKNOWN")

+

+ # Log if source_id is UNKNOWN

+ if source_id == "UNKNOWN":

+ logger.warning(

+ f"Entity '{entity_name}' has an UNKNOWN source_id. Please check the source mapping."

+ )

# Prepare node data

node_data = {

@@ -359,7 +385,15 @@ class LightRAG:

description = relationship_data["description"]

keywords = relationship_data["keywords"]

weight = relationship_data.get("weight", 1.0)

- source_id = relationship_data["source_id"]

+ # source_id = relationship_data["source_id"]

+ source_chunk_id = relationship_data.get("source_id", "UNKNOWN")

+ source_id = chunk_to_source_map.get(source_chunk_id, "UNKNOWN")

+

+ # Log if source_id is UNKNOWN

+ if source_id == "UNKNOWN":

+ logger.warning(

+ f"Relationship from '{src_id}' to '{tgt_id}' has an UNKNOWN source_id. Please check the source mapping."

+ )

# Check if nodes exist in the knowledge graph

for need_insert_id in [src_id, tgt_id]:

diff --git a/lightrag/llm.py b/lightrag/llm.py

index e247699b..e670c6ce 100644

--- a/lightrag/llm.py

+++ b/lightrag/llm.py

@@ -502,11 +502,12 @@ async def gpt_4o_mini_complete(

**kwargs,

)

+

async def nvidia_openai_complete(

prompt, system_prompt=None, history_messages=[], keyword_extraction=False, **kwargs

) -> str:

result = await openai_complete_if_cache(

- "nvidia/llama-3.1-nemotron-70b-instruct", #context length 128k

+ "nvidia/llama-3.1-nemotron-70b-instruct", # context length 128k

prompt,

system_prompt=system_prompt,

history_messages=history_messages,

@@ -517,6 +518,7 @@ async def nvidia_openai_complete(

return locate_json_string_body_from_string(result)

return result

+

async def azure_openai_complete(

prompt, system_prompt=None, history_messages=[], keyword_extraction=False, **kwargs

) -> str:

@@ -610,12 +612,12 @@ async def openai_embedding(

)

async def nvidia_openai_embedding(

texts: list[str],

- model: str = "nvidia/llama-3.2-nv-embedqa-1b-v1", #refer to https://build.nvidia.com/nim?filters=usecase%3Ausecase_text_to_embedding

+ model: str = "nvidia/llama-3.2-nv-embedqa-1b-v1", # refer to https://build.nvidia.com/nim?filters=usecase%3Ausecase_text_to_embedding

base_url: str = "https://integrate.api.nvidia.com/v1",

api_key: str = None,

- input_type: str = "passage", #query for retrieval, passage for embedding

- trunc: str = "NONE", #NONE or START or END

- encode: str = "float" #float or base64

+ input_type: str = "passage", # query for retrieval, passage for embedding

+ trunc: str = "NONE", # NONE or START or END

+ encode: str = "float", # float or base64

) -> np.ndarray:

if api_key:

os.environ["OPENAI_API_KEY"] = api_key

@@ -624,10 +626,14 @@ async def nvidia_openai_embedding(

AsyncOpenAI() if base_url is None else AsyncOpenAI(base_url=base_url)

)

response = await openai_async_client.embeddings.create(

- model=model, input=texts, encoding_format=encode, extra_body={"input_type": input_type, "truncate": trunc}

+ model=model,

+ input=texts,

+ encoding_format=encode,

+ extra_body={"input_type": input_type, "truncate": trunc},

)

return np.array([dp.embedding for dp in response.data])

+

@wrap_embedding_func_with_attrs(embedding_dim=1536, max_token_size=8191)

@retry(

stop=stop_after_attempt(3),

diff --git a/lightrag/operate.py b/lightrag/operate.py

index 09585e50..5f653d27 100644

--- a/lightrag/operate.py

+++ b/lightrag/operate.py

@@ -297,7 +297,9 @@ async def extract_entities(

chunk_dp = chunk_key_dp[1]

content = chunk_dp["content"]

# hint_prompt = entity_extract_prompt.format(**context_base, input_text=content)

- hint_prompt = entity_extract_prompt.format(**context_base, input_text="{input_text}").format(**context_base, input_text=content)

+ hint_prompt = entity_extract_prompt.format(

+ **context_base, input_text="{input_text}"

+ ).format(**context_base, input_text=content)

final_result = await use_llm_func(hint_prompt)

history = pack_user_ass_to_openai_messages(hint_prompt, final_result)

@@ -949,7 +951,6 @@ async def _find_related_text_unit_from_relationships(

split_string_by_multi_markers(dp["source_id"], [GRAPH_FIELD_SEP])

for dp in edge_datas

]

-

all_text_units_lookup = {}

for index, unit_list in enumerate(text_units):

From 1fdaf40b6e2381ad272c47299b1cd3fec32b9e9a Mon Sep 17 00:00:00 2001

From: LarFii <834462287@qq.com>

Date: Wed, 4 Dec 2024 19:58:16 +0800

Subject: [PATCH 3/4] Update Code Structure

---

README.md | 58 +++++++++++++++++++++++++++++++++++++++++++++++++++++++

1 file changed, 58 insertions(+)

diff --git a/README.md b/README.md

index 40908c3d..457554f6 100644

--- a/README.md

+++ b/README.md

@@ -961,6 +961,64 @@ def extract_queries(file_path):

```

+## Code Structure

+

+```python

+.

+├── .github

+│ ├── workflows

+│ │ └── linting.yaml

+├── examples/

+│ ├── batch_eval.py

+│ ├── generate_query.py

+│ ├── graph_visual_with_html.py

+│ ├── graph_visual_with_neo4j.py

+│ ├── insert_custom_kg.py

+│ ├── lightrag_api_openai_compatible_demo.py

+│ ├── lightrag_api_oracle_demo..py

+│ ├── lightrag_azure_openai_demo.py

+│ ├── lightrag_bedrock_demo.py

+│ ├── lightrag_hf_demo.py

+│ ├── lightrag_lmdeploy_demo.py

+│ ├── lightrag_nvidia_demo.py

+│ ├── lightrag_ollama_demo.py

+│ ├── lightrag_openai_compatible_demo.py

+│ ├── lightrag_openai_demo.py

+│ ├── lightrag_oracle_demo.py

+│ ├── lightrag_siliconcloud_demo.py

+│ └── vram_management_demo.py

+├── lightrag

+│ ├── kg

+│ │ ├── __init__.py

+│ │ ├── oracle_impl.py

+│ │ └── neo4j_impl.py

+│ ├── __init__.py

+│ ├── base.py

+│ ├── lightrag.py

+│ ├── llm.py

+│ ├── operate.py

+│ ├── prompt.py

+│ ├── storage.py

+│ └── utils.py

+├── reproduce

+│ ├── Step_0.py

+│ ├── Step_1_openai_compatible.py

+│ ├── Step_1.py

+│ ├── Step_2.py

+│ ├── Step_3_openai_compatible.py

+│ └── Step_3.py

+├── .gitignore

+├── .pre-commit-config.yaml

+├── get_all_edges_nx.py

+├── LICENSE

+├── README.md

+├── requirements.txt

+├── setup.py

+├── test_neo4j.py

+└── test.py

+```

+

+

## Star History

From dff3fe18e9f87a5bee329f2699898b17011f507c Mon Sep 17 00:00:00 2001

From: LarFii <834462287@qq.com>

Date: Wed, 4 Dec 2024 19:59:50 +0800

Subject: [PATCH 4/4] Update Code Structure

---

README.md | 10 +++++-----

1 file changed, 5 insertions(+), 5 deletions(-)

diff --git a/README.md b/README.md

index 457554f6..4b483e1b 100644

--- a/README.md

+++ b/README.md

@@ -965,8 +965,8 @@ def extract_queries(file_path):

```python

.

-├── .github

-│ ├── workflows

+├── .github/

+│ ├── workflows/

│ │ └── linting.yaml

├── examples/

│ ├── batch_eval.py

@@ -987,8 +987,8 @@ def extract_queries(file_path):

│ ├── lightrag_oracle_demo.py

│ ├── lightrag_siliconcloud_demo.py

│ └── vram_management_demo.py

-├── lightrag

-│ ├── kg

+├── lightrag/

+│ ├── kg/

│ │ ├── __init__.py

│ │ ├── oracle_impl.py

│ │ └── neo4j_impl.py

@@ -1000,7 +1000,7 @@ def extract_queries(file_path):

│ ├── prompt.py

│ ├── storage.py

│ └── utils.py

-├── reproduce

+├── reproduce/

│ ├── Step_0.py

│ ├── Step_1_openai_compatible.py

│ ├── Step_1.py