+

+

+

-This repository hosts the code of LightRAG. The structure of this code is based on [nano-graphrag](https://github.com/gusye1234/nano-graphrag).

-

+

## 🎉 News

@@ -36,18 +46,14 @@ This repository hosts the code of LightRAG. The structure of this code is based

- [x] [2024.11.09]🎯📢Introducing the [LightRAG Gui](https://lightrag-gui.streamlit.app), which allows you to insert, query, visualize, and download LightRAG knowledge.

- [x] [2024.11.04]🎯📢You can now [use Neo4J for Storage](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#using-neo4j-for-storage).

- [x] [2024.10.29]🎯📢LightRAG now supports multiple file types, including PDF, DOC, PPT, and CSV via `textract`.

-- [x] [2024.10.20]🎯📢We’ve added a new feature to LightRAG: Graph Visualization.

-- [x] [2024.10.18]🎯📢We’ve added a link to a [LightRAG Introduction Video](https://youtu.be/oageL-1I0GE). Thanks to the author!

+- [x] [2024.10.20]🎯📢We've added a new feature to LightRAG: Graph Visualization.

+- [x] [2024.10.18]🎯📢We've added a link to a [LightRAG Introduction Video](https://youtu.be/oageL-1I0GE). Thanks to the author!

- [x] [2024.10.17]🎯📢We have created a [Discord channel](https://discord.gg/yF2MmDJyGJ)! Welcome to join for sharing and discussions! 🎉🎉

- [x] [2024.10.16]🎯📢LightRAG now supports [Ollama models](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#quick-start)!

- [x] [2024.10.15]🎯📢LightRAG now supports [Hugging Face models](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#quick-start)!

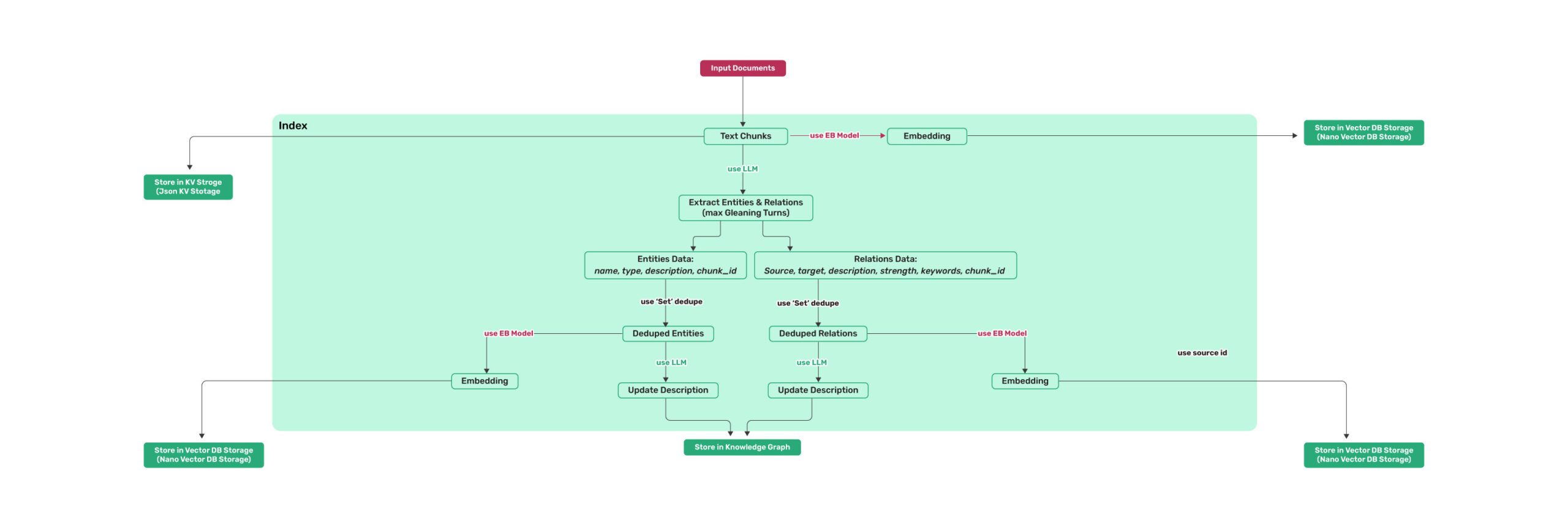

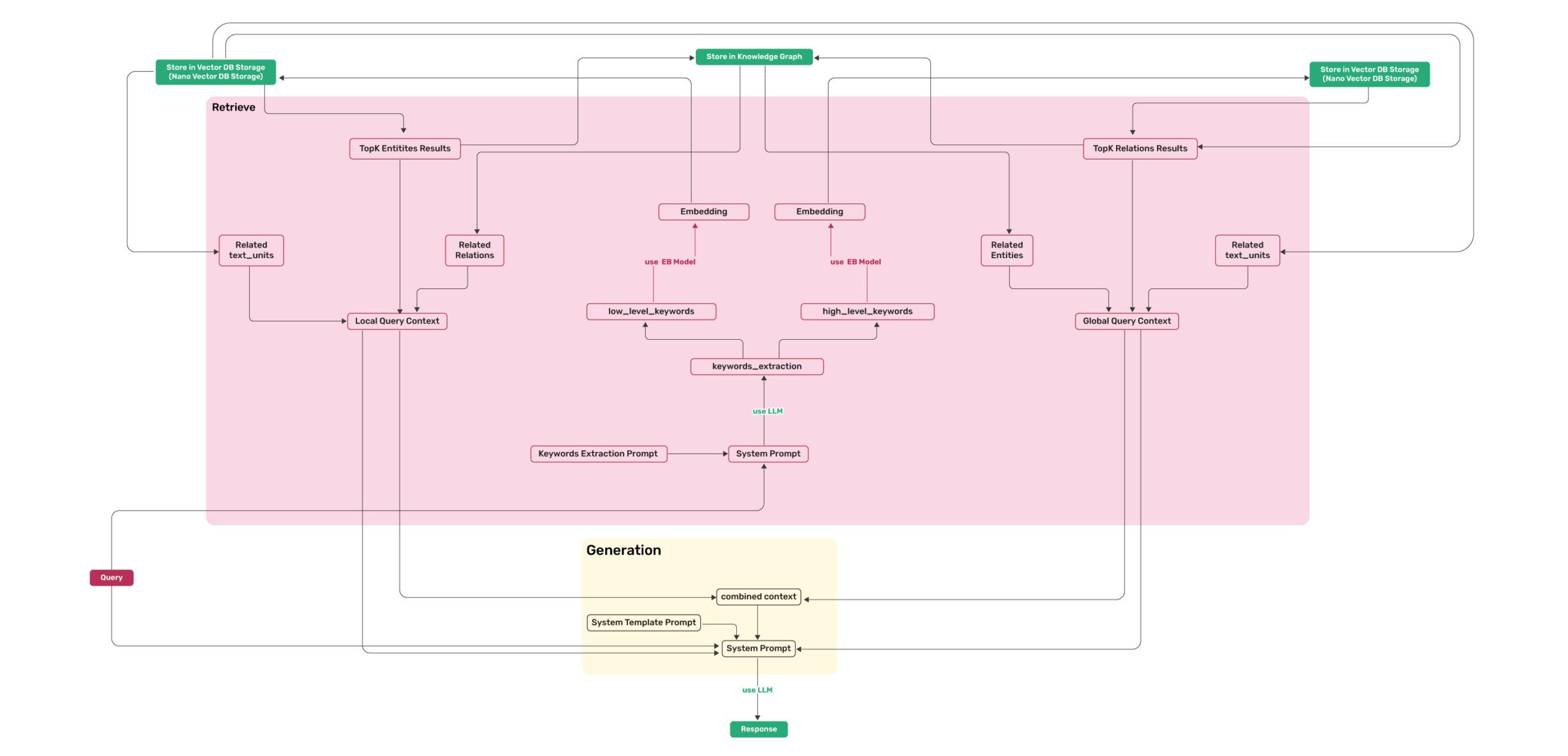

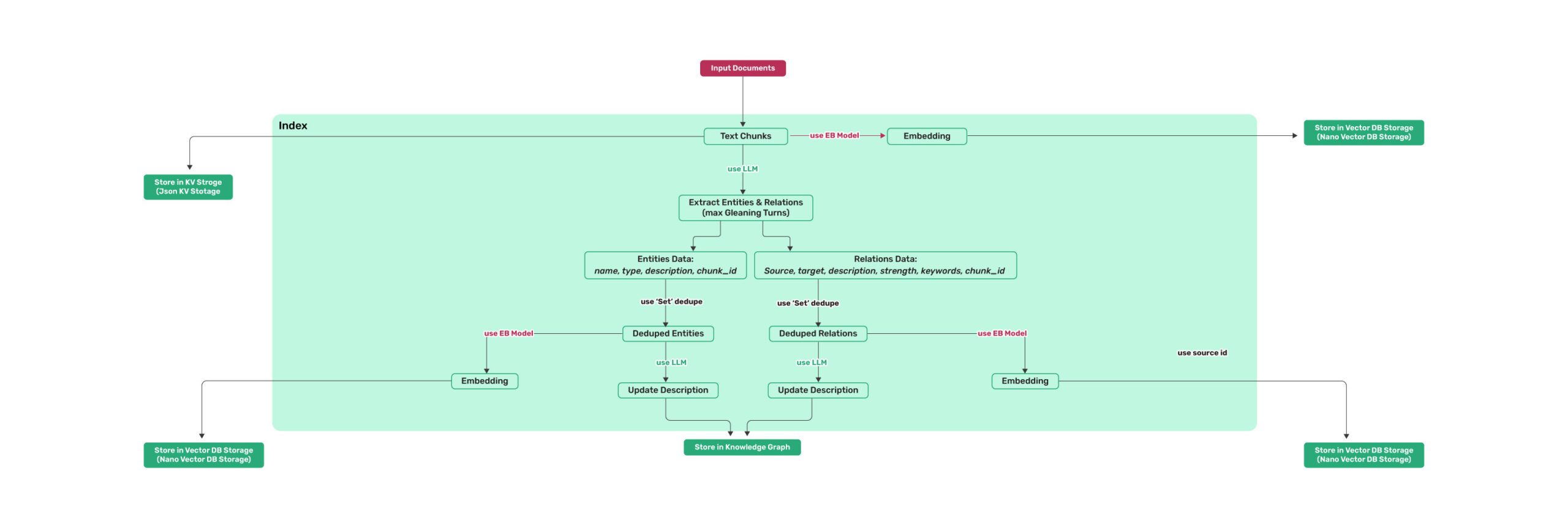

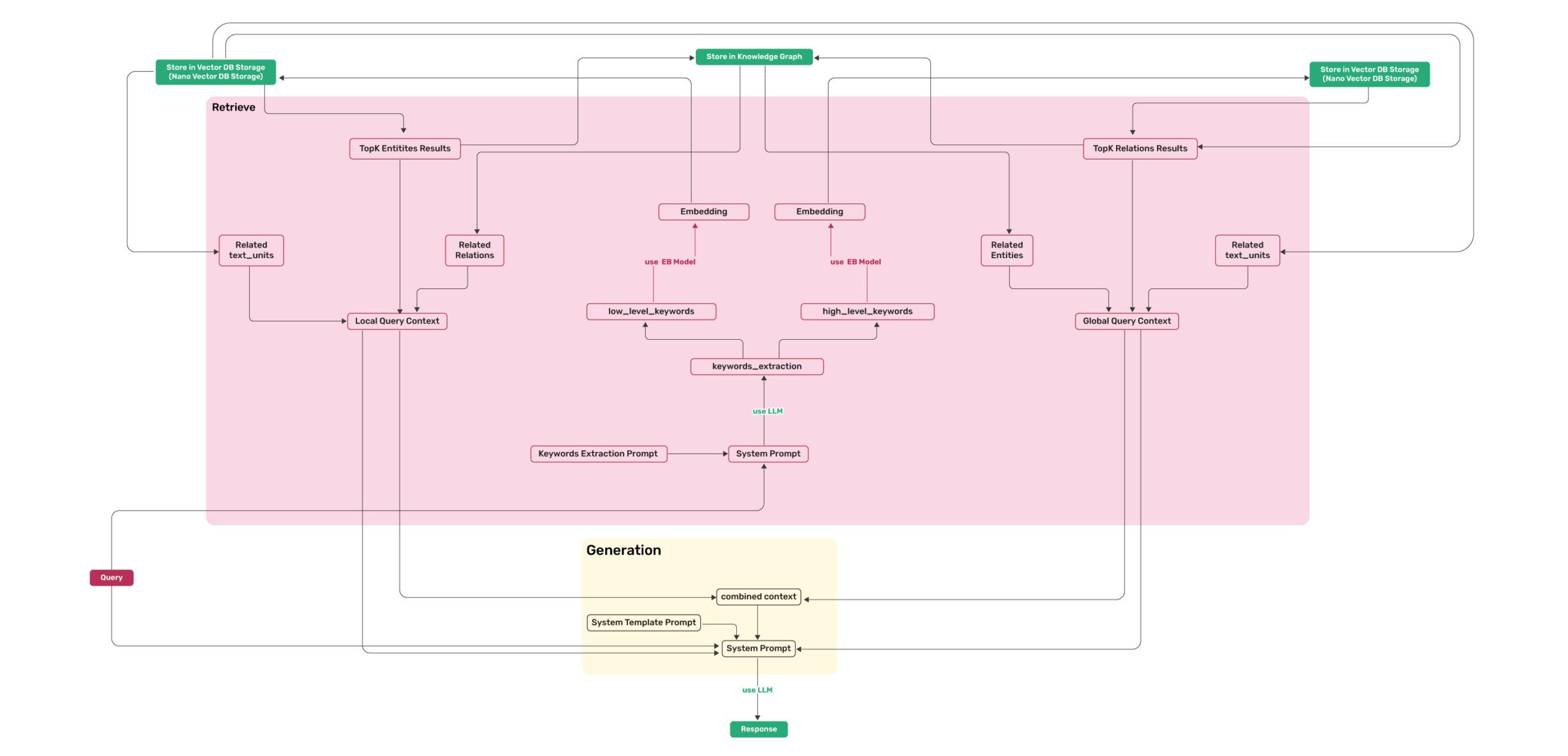

## Algorithm Flowchart

-

-*Figure 1: LightRAG Indexing Flowchart - Img Caption : [Source](https://learnopencv.com/lightrag/)*

-

-*Figure 2: LightRAG Retrieval and Querying Flowchart - Img Caption : [Source](https://learnopencv.com/lightrag/)*

## Install

@@ -75,7 +81,7 @@ Use the below Python snippet (in a script) to initialize LightRAG and perform qu

```python

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import gpt_4o_mini_complete, gpt_4o_complete

+from lightrag.llm.openai import gpt_4o_mini_complete, gpt_4o_complete

#########

# Uncomment the below two lines if running in a jupyter notebook to handle the async nature of rag.insert()

@@ -171,7 +177,7 @@ async def llm_model_func(

)

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model="solar-embedding-1-large-query",

api_key=os.getenv("UPSTAGE_API_KEY"),

@@ -227,7 +233,7 @@ If you want to use Ollama models, you need to pull model you plan to use and emb

Then you only need to set LightRAG as follows:

```python

-from lightrag.llm import ollama_model_complete, ollama_embedding

+from lightrag.llm.ollama import ollama_model_complete, ollama_embed

from lightrag.utils import EmbeddingFunc

# Initialize LightRAG with Ollama model

@@ -239,7 +245,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=768,

max_token_size=8192,

- func=lambda texts: ollama_embedding(

+ func=lambda texts: ollama_embed(

texts,

embed_model="nomic-embed-text"

)

@@ -684,7 +690,7 @@ if __name__ == "__main__":

| **entity\_summary\_to\_max\_tokens** | `int` | Maximum token size for each entity summary | `500` |

| **node\_embedding\_algorithm** | `str` | Algorithm for node embedding (currently not used) | `node2vec` |

| **node2vec\_params** | `dict` | Parameters for node embedding | `{"dimensions": 1536,"num_walks": 10,"walk_length": 40,"window_size": 2,"iterations": 3,"random_seed": 3,}` |

-| **embedding\_func** | `EmbeddingFunc` | Function to generate embedding vectors from text | `openai_embedding` |

+| **embedding\_func** | `EmbeddingFunc` | Function to generate embedding vectors from text | `openai_embed` |

| **embedding\_batch\_num** | `int` | Maximum batch size for embedding processes (multiple texts sent per batch) | `32` |

| **embedding\_func\_max\_async** | `int` | Maximum number of concurrent asynchronous embedding processes | `16` |

| **llm\_model\_func** | `callable` | Function for LLM generation | `gpt_4o_mini_complete` |
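Reviewer note: the README hunks above follow one pattern that repeats across the example files in this diff. Helpers move from the flat `lightrag.llm` namespace into per-provider submodules, and most `*_embedding` functions are renamed to `*_embed`. The sketch below records that mapping using only names that appear in this diff. The `RENAMES` table and the `new_import_line` helper are hypothetical illustrations and are not part of LightRAG.

```python
# Old name in `lightrag.llm` -> (new submodule, new name).
# Every entry is copied from a -/+ pair in this diff; nothing else is assumed.
RENAMES = {
    "openai_embedding": ("lightrag.llm.openai", "openai_embed"),
    "ollama_embedding": ("lightrag.llm.ollama", "ollama_embed"),
    "hf_embedding": ("lightrag.llm.hf", "hf_embed"),
    "bedrock_embedding": ("lightrag.llm.bedrock", "bedrock_embed"),
    "jina_embedding": ("lightrag.llm.jina", "jina_embed"),
    "gpt_4o_mini_complete": ("lightrag.llm.openai", "gpt_4o_mini_complete"),
    "openai_complete_if_cache": ("lightrag.llm.openai", "openai_complete_if_cache"),
    "ollama_model_complete": ("lightrag.llm.ollama", "ollama_model_complete"),
}


def new_import_line(old_name: str) -> str:
    """Return the import statement that replaces `from lightrag.llm import <old_name>`."""
    module, new_name = RENAMES[old_name]
    return f"from {module} import {new_name}"


if __name__ == "__main__":
    for old in ("openai_embedding", "ollama_model_complete"):
        print(f"{old:28s} -> {new_import_line(old)}")
```

A table like this can help when migrating downstream scripts, since a rename (for example `hf_embedding` to `hf_embed`) also changes every call site, not only the import line.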

diff --git a/config.ini b/config.ini

deleted file mode 100644

index eb1d4e88..00000000

--- a/config.ini

+++ /dev/null

@@ -1,13 +0,0 @@

-[redis]

-uri = redis://localhost:6379

-

-[neo4j]

-uri = #

-username = neo4j

-password = 12345678

-

-[milvus]

-uri = #

-user = root

-password = Milvus

-db_name = lightrag

diff --git a/docs/Algorithm.md b/docs/Algorithm.md

new file mode 100644

index 00000000..992eed06

--- /dev/null

+++ b/docs/Algorithm.md

@@ -0,0 +1,4 @@

+

+*Figure 1: LightRAG Indexing Flowchart - Img Caption : [Source](https://learnopencv.com/lightrag/)*

+

+*Figure 2: LightRAG Retrieval and Querying Flowchart - Img Caption : [Source](https://learnopencv.com/lightrag/)*

diff --git a/examples/insert_custom_kg.py b/examples/insert_custom_kg.py

index 1c02ea25..50ad925e 100644

--- a/examples/insert_custom_kg.py

+++ b/examples/insert_custom_kg.py

@@ -1,6 +1,6 @@

import os

from lightrag import LightRAG

-from lightrag.llm import gpt_4o_mini_complete

+from lightrag.llm.openai import gpt_4o_mini_complete

#########

# Uncomment the below two lines if running in a jupyter notebook to handle the async nature of rag.insert()

# import nest_asyncio

diff --git a/examples/lightrag_api_ollama_demo.py b/examples/lightrag_api_ollama_demo.py

index 36df1262..634264d3 100644

--- a/examples/lightrag_api_ollama_demo.py

+++ b/examples/lightrag_api_ollama_demo.py

@@ -2,7 +2,7 @@ from fastapi import FastAPI, HTTPException, File, UploadFile

from pydantic import BaseModel

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_embedding, ollama_model_complete

+from lightrag.llm.ollama import ollama_embed, ollama_model_complete

from lightrag.utils import EmbeddingFunc

from typing import Optional

import asyncio

@@ -38,7 +38,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=768,

max_token_size=8192,

- func=lambda texts: ollama_embedding(

+ func=lambda texts: ollama_embed(

texts, embed_model="nomic-embed-text", host="http://localhost:11434"

),

),

diff --git a/examples/lightrag_api_open_webui_demo.py b/examples/lightrag_api_open_webui_demo.py

index 17e1817e..88454da8 100644

--- a/examples/lightrag_api_open_webui_demo.py

+++ b/examples/lightrag_api_open_webui_demo.py

@@ -9,7 +9,7 @@ from typing import Optional

import os

import logging

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_model_complete, ollama_embed

+from lightrag.llm.ollama import ollama_model_complete, ollama_embed

from lightrag.utils import EmbeddingFunc

import nest_asyncio

diff --git a/examples/lightrag_api_openai_compatible_demo.py b/examples/lightrag_api_openai_compatible_demo.py

index 1749753b..8173dc5b 100644

--- a/examples/lightrag_api_openai_compatible_demo.py

+++ b/examples/lightrag_api_openai_compatible_demo.py

@@ -2,7 +2,7 @@ from fastapi import FastAPI, HTTPException, File, UploadFile

from pydantic import BaseModel

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, openai_embedding

+from lightrag.llm.openai import openai_complete_if_cache, openai_embed

from lightrag.utils import EmbeddingFunc

import numpy as np

from typing import Optional

@@ -48,7 +48,7 @@ async def llm_model_func(

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model=EMBEDDING_MODEL,

)

diff --git a/examples/lightrag_api_oracle_demo.py b/examples/lightrag_api_oracle_demo.py

index 65ac4ddd..602ca900 100644

--- a/examples/lightrag_api_oracle_demo.py

+++ b/examples/lightrag_api_oracle_demo.py

@@ -13,7 +13,7 @@ from pathlib import Path

import asyncio

import nest_asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, openai_embedding

+from lightrag.llm.openai import openai_complete_if_cache, openai_embed

from lightrag.utils import EmbeddingFunc

import numpy as np

@@ -64,7 +64,7 @@ async def llm_model_func(

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model=EMBEDDING_MODEL,

api_key=APIKEY,

diff --git a/examples/lightrag_bedrock_demo.py b/examples/lightrag_bedrock_demo.py

index 7e18ea57..6bb6c7d4 100644

--- a/examples/lightrag_bedrock_demo.py

+++ b/examples/lightrag_bedrock_demo.py

@@ -6,7 +6,7 @@ import os

import logging

from lightrag import LightRAG, QueryParam

-from lightrag.llm import bedrock_complete, bedrock_embedding

+from lightrag.llm.bedrock import bedrock_complete, bedrock_embed

from lightrag.utils import EmbeddingFunc

logging.getLogger("aiobotocore").setLevel(logging.WARNING)

@@ -20,7 +20,7 @@ rag = LightRAG(

llm_model_func=bedrock_complete,

llm_model_name="Anthropic Claude 3 Haiku // Amazon Bedrock",

embedding_func=EmbeddingFunc(

- embedding_dim=1024, max_token_size=8192, func=bedrock_embedding

+ embedding_dim=1024, max_token_size=8192, func=bedrock_embed

),

)

diff --git a/examples/lightrag_hf_demo.py b/examples/lightrag_hf_demo.py

index 91033e50..a5088e54 100644

--- a/examples/lightrag_hf_demo.py

+++ b/examples/lightrag_hf_demo.py

@@ -1,7 +1,7 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import hf_model_complete, hf_embedding

+from lightrag.llm.hf import hf_model_complete, hf_embed

from lightrag.utils import EmbeddingFunc

from transformers import AutoModel, AutoTokenizer

@@ -17,7 +17,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=384,

max_token_size=5000,

- func=lambda texts: hf_embedding(

+ func=lambda texts: hf_embed(

texts,

tokenizer=AutoTokenizer.from_pretrained(

"sentence-transformers/all-MiniLM-L6-v2"

diff --git a/examples/lightrag_jinaai_demo.py b/examples/lightrag_jinaai_demo.py

index 4daead75..0378b61b 100644

--- a/examples/lightrag_jinaai_demo.py

+++ b/examples/lightrag_jinaai_demo.py

@@ -1,13 +1,14 @@

import numpy as np

from lightrag import LightRAG, QueryParam

from lightrag.utils import EmbeddingFunc

-from lightrag.llm import jina_embedding, openai_complete_if_cache

+from lightrag.llm.jina import jina_embed

+from lightrag.llm.openai import openai_complete_if_cache

import os

import asyncio

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await jina_embedding(texts, api_key="YourJinaAPIKey")

+ return await jina_embed(texts, api_key="YourJinaAPIKey")

WORKING_DIR = "./dickens"

diff --git a/examples/lightrag_lmdeploy_demo.py b/examples/lightrag_lmdeploy_demo.py

index c0ee86cb..d12eb564 100644

--- a/examples/lightrag_lmdeploy_demo.py

+++ b/examples/lightrag_lmdeploy_demo.py

@@ -1,7 +1,8 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import lmdeploy_model_if_cache, hf_embedding

+from lightrag.llm.lmdeploy import lmdeploy_model_if_cache

+from lightrag.llm.hf import hf_embed

from lightrag.utils import EmbeddingFunc

from transformers import AutoModel, AutoTokenizer

@@ -42,7 +43,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=384,

max_token_size=5000,

- func=lambda texts: hf_embedding(

+ func=lambda texts: hf_embed(

texts,

tokenizer=AutoTokenizer.from_pretrained(

"sentence-transformers/all-MiniLM-L6-v2"

diff --git a/examples/lightrag_nvidia_demo.py b/examples/lightrag_nvidia_demo.py

index 5af562b0..da4b46ff 100644

--- a/examples/lightrag_nvidia_demo.py

+++ b/examples/lightrag_nvidia_demo.py

@@ -3,7 +3,7 @@ import asyncio

from lightrag import LightRAG, QueryParam

from lightrag.llm import (

openai_complete_if_cache,

- nvidia_openai_embedding,

+ nvidia_openai_embed,

)

from lightrag.utils import EmbeddingFunc

import numpy as np

@@ -47,7 +47,7 @@ nvidia_embed_model = "nvidia/nv-embedqa-e5-v5"

async def indexing_embedding_func(texts: list[str]) -> np.ndarray:

- return await nvidia_openai_embedding(

+ return await nvidia_openai_embed(

texts,

model=nvidia_embed_model, # maximum 512 token

# model="nvidia/llama-3.2-nv-embedqa-1b-v1",

@@ -60,7 +60,7 @@ async def indexing_embedding_func(texts: list[str]) -> np.ndarray:

async def query_embedding_func(texts: list[str]) -> np.ndarray:

- return await nvidia_openai_embedding(

+ return await nvidia_openai_embed(

texts,

model=nvidia_embed_model, # maximum 512 token

# model="nvidia/llama-3.2-nv-embedqa-1b-v1",

diff --git a/examples/lightrag_ollama_age_demo.py b/examples/lightrag_ollama_age_demo.py

index 403843a7..d394ded4 100644

--- a/examples/lightrag_ollama_age_demo.py

+++ b/examples/lightrag_ollama_age_demo.py

@@ -4,7 +4,7 @@ import logging

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_embedding, ollama_model_complete

+from lightrag.llm.ollama import ollama_embed, ollama_model_complete

from lightrag.utils import EmbeddingFunc

WORKING_DIR = "./dickens_age"

@@ -32,7 +32,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=768,

max_token_size=8192,

- func=lambda texts: ollama_embedding(

+ func=lambda texts: ollama_embed(

texts, embed_model="nomic-embed-text", host="http://localhost:11434"

),

),

diff --git a/examples/lightrag_ollama_demo.py b/examples/lightrag_ollama_demo.py

index 162900c4..95856fa2 100644

--- a/examples/lightrag_ollama_demo.py

+++ b/examples/lightrag_ollama_demo.py

@@ -3,7 +3,7 @@ import os

import inspect

import logging

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_model_complete, ollama_embedding

+from lightrag.llm.ollama import ollama_model_complete, ollama_embed

from lightrag.utils import EmbeddingFunc

WORKING_DIR = "./dickens"

@@ -23,7 +23,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=768,

max_token_size=8192,

- func=lambda texts: ollama_embedding(

+ func=lambda texts: ollama_embed(

texts, embed_model="nomic-embed-text", host="http://localhost:11434"

),

),

diff --git a/examples/lightrag_ollama_gremlin_demo.py b/examples/lightrag_ollama_gremlin_demo.py

index 35ffece8..fa7d4fb5 100644

--- a/examples/lightrag_ollama_gremlin_demo.py

+++ b/examples/lightrag_ollama_gremlin_demo.py

@@ -10,7 +10,7 @@ import os

# logging.basicConfig(format="%(levelname)s:%(message)s", level=logging.WARN)

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_embedding, ollama_model_complete

+from lightrag.llm.ollama import ollama_embed, ollama_model_complete

from lightrag.utils import EmbeddingFunc

WORKING_DIR = "./dickens_gremlin"

@@ -41,7 +41,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=768,

max_token_size=8192,

- func=lambda texts: ollama_embedding(

+ func=lambda texts: ollama_embed(

texts, embed_model="nomic-embed-text", host="http://localhost:11434"

),

),

diff --git a/examples/lightrag_ollama_neo4j_milvus_mongo_demo.py b/examples/lightrag_ollama_neo4j_milvus_mongo_demo.py

index 8d26ba65..b71489c7 100644

--- a/examples/lightrag_ollama_neo4j_milvus_mongo_demo.py

+++ b/examples/lightrag_ollama_neo4j_milvus_mongo_demo.py

@@ -1,6 +1,6 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_model_complete, ollama_embed

+from lightrag.llm.ollama import ollama_model_complete, ollama_embed

from lightrag.utils import EmbeddingFunc

# WorkingDir

diff --git a/examples/lightrag_openai_compatible_demo.py b/examples/lightrag_openai_compatible_demo.py

index 3494ae03..09673dd8 100644

--- a/examples/lightrag_openai_compatible_demo.py

+++ b/examples/lightrag_openai_compatible_demo.py

@@ -1,7 +1,7 @@

import os

import asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, openai_embedding

+from lightrag.llm.openai import openai_complete_if_cache, openai_embed

from lightrag.utils import EmbeddingFunc

import numpy as np

@@ -26,7 +26,7 @@ async def llm_model_func(

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model="solar-embedding-1-large-query",

api_key=os.getenv("UPSTAGE_API_KEY"),

diff --git a/examples/lightrag_openai_compatible_demo_embedding_cache.py b/examples/lightrag_openai_compatible_demo_embedding_cache.py

index 69106d05..d696ce25 100644

--- a/examples/lightrag_openai_compatible_demo_embedding_cache.py

+++ b/examples/lightrag_openai_compatible_demo_embedding_cache.py

@@ -1,7 +1,7 @@

import os

import asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, openai_embedding

+from lightrag.llm.openai import openai_complete_if_cache, openai_embed

from lightrag.utils import EmbeddingFunc

import numpy as np

@@ -26,7 +26,7 @@ async def llm_model_func(

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model="solar-embedding-1-large-query",

api_key=os.getenv("UPSTAGE_API_KEY"),

diff --git a/examples/lightrag_openai_compatible_stream_demo.py b/examples/lightrag_openai_compatible_stream_demo.py

index 9345ada5..93c4297c 100644

--- a/examples/lightrag_openai_compatible_stream_demo.py

+++ b/examples/lightrag_openai_compatible_stream_demo.py

@@ -1,7 +1,7 @@

import os

import inspect

from lightrag import LightRAG

-from lightrag.llm import openai_complete, openai_embedding

+from lightrag.llm.openai import openai_complete, openai_embed

from lightrag.utils import EmbeddingFunc

from lightrag.lightrag import always_get_an_event_loop

from lightrag import QueryParam

@@ -24,7 +24,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=1024,

max_token_size=8192,

- func=lambda texts: openai_embedding(

+ func=lambda texts: openai_embed(

texts=texts,

model="text-embedding-bge-m3",

base_url="http://127.0.0.1:1234/v1",

diff --git a/examples/lightrag_openai_demo.py b/examples/lightrag_openai_demo.py

index 29bc75ca..7a43a710 100644

--- a/examples/lightrag_openai_demo.py

+++ b/examples/lightrag_openai_demo.py

@@ -1,7 +1,7 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import gpt_4o_mini_complete

+from lightrag.llm.openai import gpt_4o_mini_complete

WORKING_DIR = "./dickens"

diff --git a/examples/lightrag_openai_neo4j_milvus_redis_demo.py b/examples/lightrag_openai_neo4j_milvus_redis_demo.py

index 3de1a657..75e110aa 100644

--- a/examples/lightrag_openai_neo4j_milvus_redis_demo.py

+++ b/examples/lightrag_openai_neo4j_milvus_redis_demo.py

@@ -1,6 +1,7 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_embed, openai_complete_if_cache

+from lightrag.llm.ollama import ollama_embed

+from lightrag.llm.openai import openai_complete_if_cache

from lightrag.utils import EmbeddingFunc

# WorkingDir

diff --git a/examples/lightrag_oracle_demo.py b/examples/lightrag_oracle_demo.py

index 6de6e0a7..47020fd6 100644

--- a/examples/lightrag_oracle_demo.py

+++ b/examples/lightrag_oracle_demo.py

@@ -3,7 +3,7 @@ import os

from pathlib import Path

import asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, openai_embedding

+from lightrag.llm.openai import openai_complete_if_cache, openai_embed

from lightrag.utils import EmbeddingFunc

import numpy as np

from lightrag.kg.oracle_impl import OracleDB

@@ -42,7 +42,7 @@ async def llm_model_func(

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model=EMBEDMODEL,

api_key=APIKEY,

diff --git a/examples/lightrag_siliconcloud_demo.py b/examples/lightrag_siliconcloud_demo.py

index ca15ccd0..5f4f86a1 100644

--- a/examples/lightrag_siliconcloud_demo.py

+++ b/examples/lightrag_siliconcloud_demo.py

@@ -1,7 +1,8 @@

import os

import asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import openai_complete_if_cache, siliconcloud_embedding

+from lightrag.llm.openai import openai_complete_if_cache

+from lightrag.llm.siliconcloud import siliconcloud_embedding

from lightrag.utils import EmbeddingFunc

import numpy as np

diff --git a/examples/lightrag_zhipu_demo.py b/examples/lightrag_zhipu_demo.py

index 0924656d..97a5042e 100644

--- a/examples/lightrag_zhipu_demo.py

+++ b/examples/lightrag_zhipu_demo.py

@@ -3,7 +3,7 @@ import logging

from lightrag import LightRAG, QueryParam

-from lightrag.llm import zhipu_complete, zhipu_embedding

+from lightrag.llm.zhipu import zhipu_complete, zhipu_embedding

from lightrag.utils import EmbeddingFunc

WORKING_DIR = "./dickens"

diff --git a/examples/lightrag_zhipu_postgres_demo.py b/examples/lightrag_zhipu_postgres_demo.py

index d0461d84..4ed88602 100644

--- a/examples/lightrag_zhipu_postgres_demo.py

+++ b/examples/lightrag_zhipu_postgres_demo.py

@@ -6,7 +6,8 @@ from dotenv import load_dotenv

from lightrag import LightRAG, QueryParam

from lightrag.kg.postgres_impl import PostgreSQLDB

-from lightrag.llm import ollama_embedding, zhipu_complete

+from lightrag.llm.ollama import ollama_embedding

+from lightrag.llm.zhipu import zhipu_complete

from lightrag.utils import EmbeddingFunc

load_dotenv()

diff --git a/examples/test.py b/examples/test.py

index 80bcaa6d..67ee22eb 100644

--- a/examples/test.py

+++ b/examples/test.py

@@ -1,6 +1,6 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import gpt_4o_mini_complete

+from lightrag.llm.openai import gpt_4o_mini_complete

#########

# Uncomment the below two lines if running in a jupyter notebook to handle the async nature of rag.insert()

# import nest_asyncio

diff --git a/examples/test_chromadb.py b/examples/test_chromadb.py

index df721bb2..0e6361ed 100644

--- a/examples/test_chromadb.py

+++ b/examples/test_chromadb.py

@@ -1,7 +1,7 @@

import os

import asyncio

from lightrag import LightRAG, QueryParam

-from lightrag.llm import gpt_4o_mini_complete, openai_embedding

+from lightrag.llm.openai import gpt_4o_mini_complete, openai_embed

from lightrag.utils import EmbeddingFunc

import numpy as np

@@ -35,7 +35,7 @@ EMBEDDING_MAX_TOKEN_SIZE = int(os.environ.get("EMBEDDING_MAX_TOKEN_SIZE", 8192))

async def embedding_func(texts: list[str]) -> np.ndarray:

- return await openai_embedding(

+ return await openai_embed(

texts,

model=EMBEDDING_MODEL,

)

diff --git a/examples/test_neo4j.py b/examples/test_neo4j.py

index 0048fc17..ac5f7fb7 100644

--- a/examples/test_neo4j.py

+++ b/examples/test_neo4j.py

@@ -1,6 +1,6 @@

import os

from lightrag import LightRAG, QueryParam

-from lightrag.llm import gpt_4o_mini_complete

+from lightrag.llm.openai import gpt_4o_mini_complete

#########

diff --git a/examples/test_split_by_character.ipynb b/examples/test_split_by_character.ipynb

index e8e08b92..df5d938d 100644

--- a/examples/test_split_by_character.ipynb

+++ b/examples/test_split_by_character.ipynb

@@ -16,7 +16,7 @@

"import logging\n",

"import numpy as np\n",

"from lightrag import LightRAG, QueryParam\n",

- "from lightrag.llm import openai_complete_if_cache, openai_embedding\n",

+ "from lightrag.llm.openai import openai_complete_if_cache, openai_embed\n",

"from lightrag.utils import EmbeddingFunc\n",

"import nest_asyncio"

]

@@ -74,7 +74,7 @@

"\n",

"\n",

"async def embedding_func(texts: list[str]) -> np.ndarray:\n",

- " return await openai_embedding(\n",

+ " return await openai_embed(\n",

" texts,\n",

" model=\"ep-20241231173413-pgjmk\",\n",

" api_key=API,\n",

@@ -138,7 +138,7 @@

"\n",

"\n",

"async def embedding_func(texts: list[str]) -> np.ndarray:\n",

- " return await openai_embedding(\n",

+ " return await openai_embed(\n",

" texts,\n",

" model=\"ep-20241231173413-pgjmk\",\n",

" api_key=API,\n",

diff --git a/examples/vram_management_demo.py b/examples/vram_management_demo.py

index c173b913..b8d0872e 100644

--- a/examples/vram_management_demo.py

+++ b/examples/vram_management_demo.py

@@ -1,7 +1,7 @@

import os

import time

from lightrag import LightRAG, QueryParam

-from lightrag.llm import ollama_model_complete, ollama_embedding

+from lightrag.llm.ollama import ollama_model_complete, ollama_embed

from lightrag.utils import EmbeddingFunc

# Working directory and the directory path for text files

@@ -20,7 +20,7 @@ rag = LightRAG(

embedding_func=EmbeddingFunc(

embedding_dim=768,

max_token_size=8192,

- func=lambda texts: ollama_embedding(texts, embed_model="nomic-embed-text"),

+ func=lambda texts: ollama_embed(texts, embed_model="nomic-embed-text"),

),

)

diff --git a/lightrag/api/lightrag_server.py b/lightrag/api/lightrag_server.py

index e5f68d72..87a72a98 100644

--- a/lightrag/api/lightrag_server.py

+++ b/lightrag/api/lightrag_server.py

@@ -8,10 +8,6 @@ import time

import re

from typing import List, Dict, Any, Optional, Union

from lightrag import LightRAG, QueryParam

-from lightrag.llm import lollms_model_complete, lollms_embed

-from lightrag.llm import ollama_model_complete, ollama_embed

-from lightrag.llm import openai_complete_if_cache, openai_embedding

-from lightrag.llm import azure_openai_complete_if_cache, azure_openai_embedding

from lightrag.api import __api_version__

from lightrag.utils import EmbeddingFunc

@@ -468,13 +464,12 @@ def parse_args() -> argparse.Namespace:

help="Path to SSL private key file (required if --ssl is enabled)",

)

parser.add_argument(

- '--auto-scan-at-startup',

- action='store_true',

+ "--auto-scan-at-startup",

+ action="store_true",

default=False,

- help='Enable automatic scanning when the program starts'

+ help="Enable automatic scanning when the program starts",

)

-

args = parser.parse_args()

return args

@@ -679,18 +674,21 @@ def create_app(args):

async def lifespan(app: FastAPI):

"""Lifespan context manager for startup and shutdown events"""

# Startup logic

- try:

- new_files = doc_manager.scan_directory()

- for file_path in new_files:

- try:

- await index_file(file_path)

- except Exception as e:

- trace_exception(e)

- logging.error(f"Error indexing file {file_path}: {str(e)}")

+ if args.auto_scan_at_startup:

+ try:

+ new_files = doc_manager.scan_directory()

+ for file_path in new_files:

+ try:

+ await index_file(file_path)

+ except Exception as e:

+ trace_exception(e)

+ logging.error(f"Error indexing file {file_path}: {str(e)}")

- logging.info(f"Indexed {len(new_files)} documents from {args.input_dir}")

- except Exception as e:

- logging.error(f"Error during startup indexing: {str(e)}")

+ ASCIIColors.info(

+ f"Indexed {len(new_files)} documents from {args.input_dir}"

+ )

+ except Exception as e:

+ logging.error(f"Error during startup indexing: {str(e)}")

yield

# Cleanup logic (if needed)

pass
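Reviewer note: the hunk above makes the startup scan opt-in through `--auto-scan-at-startup`, so a large input directory no longer delays every start. The sketch below shows the same gating with the standard library only, assuming a flag of that name. `make_lifespan`, `scan_directory`, `index_file`, and `demo` are hypothetical stand-ins for the server's own objects, and no FastAPI app is involved.

```python
import argparse
import asyncio
from contextlib import asynccontextmanager


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--auto-scan-at-startup",
        action="store_true",
        default=False,
        help="Enable automatic scanning when the program starts",
    )
    return parser.parse_args(argv)


def make_lifespan(args, scan_directory, index_file):
    """Build a lifespan context manager that scans only when the flag is set."""

    @asynccontextmanager
    async def lifespan(app):
        if args.auto_scan_at_startup:
            for file_path in scan_directory():
                try:
                    await index_file(file_path)
                except Exception as exc:  # keep going if one file fails
                    print(f"Error indexing file {file_path}: {exc}")
        yield  # the application would serve requests here

    return lifespan


async def demo(argv):
    indexed = []

    async def index_file(path):
        indexed.append(path)

    lifespan = make_lifespan(parse_args(argv), lambda: ["a.txt", "b.txt"], index_file)
    async with lifespan(app=None):
        pass
    return indexed


if __name__ == "__main__":
    print(asyncio.run(demo([])))
    print(asyncio.run(demo(["--auto-scan-at-startup"])))
```

Without the flag nothing is indexed at startup, and documents can still be picked up later through the manual `/documents/scan` endpoint shown elsewhere in this diff.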

@@ -721,6 +719,20 @@ def create_app(args):

# Create working directory if it doesn't exist

Path(args.working_dir).mkdir(parents=True, exist_ok=True)

+ if args.llm_binding == "lollms" or args.embedding_binding == "lollms":

+ from lightrag.llm.lollms import lollms_model_complete, lollms_embed

+ if args.llm_binding == "ollama" or args.embedding_binding == "ollama":

+ from lightrag.llm.ollama import ollama_model_complete, ollama_embed

+ if args.llm_binding == "openai" or args.embedding_binding == "openai":

+ from lightrag.llm.openai import openai_complete_if_cache, openai_embed

+ if (

+ args.llm_binding == "azure_openai"

+ or args.embedding_binding == "azure_openai"

+ ):

+ from lightrag.llm.azure_openai import (

+ azure_openai_complete_if_cache,

+ azure_openai_embed,

+ )

async def openai_alike_model_complete(

prompt,
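Reviewer note: the hunk above replaces the module-level provider imports with imports that run only when a binding selects that provider, so an unused provider's dependencies need not be installed. A minimal sketch of the same idea with `importlib`, assuming the four binding names used in the hunk. `BINDING_MODULES`, `modules_to_import`, and `load_bindings` are hypothetical names and are not part of LightRAG.

```python
import importlib

# Binding name -> provider submodule, as listed in the hunk.
BINDING_MODULES = {
    "lollms": "lightrag.llm.lollms",
    "ollama": "lightrag.llm.ollama",
    "openai": "lightrag.llm.openai",
    "azure_openai": "lightrag.llm.azure_openai",
}


def modules_to_import(llm_binding: str, embedding_binding: str) -> list[str]:
    """Return the provider modules needed for the chosen bindings."""
    selected = {llm_binding, embedding_binding}
    return [module for name, module in BINDING_MODULES.items() if name in selected]


def load_bindings(llm_binding, embedding_binding, import_module=importlib.import_module):
    """Import only the required provider modules; `import_module` is injectable for tests."""
    return {
        path: import_module(path)
        for path in modules_to_import(llm_binding, embedding_binding)
    }


if __name__ == "__main__":
    # An Ollama LLM paired with OpenAI embeddings needs two modules, not four.
    print(modules_to_import("ollama", "openai"))
```

The comparison key is the binding name (such as `ollama`), not the host URL, since a host value would never equal a provider name.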

@@ -774,13 +786,13 @@ def create_app(args):

api_key=args.embedding_binding_api_key,

)

if args.embedding_binding == "ollama"

- else azure_openai_embedding(

+ else azure_openai_embed(

texts,

model=args.embedding_model, # no host is used for openai,

api_key=args.embedding_binding_api_key,

)

if args.embedding_binding == "azure_openai"

- else openai_embedding(

+ else openai_embed(

texts,

model=args.embedding_model, # no host is used for openai,

api_key=args.embedding_binding_api_key,

@@ -907,42 +919,21 @@ def create_app(args):

else:

logging.warning(f"No content extracted from file: {file_path}")

- @asynccontextmanager

- async def lifespan(app: FastAPI):

- """Lifespan context manager for startup and shutdown events"""

- # Startup logic

- # Now only if this option is active, we can scan. This is better for big databases where there are hundreds of

- # files. Makes the startup faster

- if args.auto_scan_at_startup:

- ASCIIColors.info("Auto scan is active, rescanning the input directory.")

- try:

- new_files = doc_manager.scan_directory()

- for file_path in new_files:

- try:

- await index_file(file_path)

- except Exception as e:

- trace_exception(e)

- logging.error(f"Error indexing file {file_path}: {str(e)}")

-

- logging.info(f"Indexed {len(new_files)} documents from {args.input_dir}")

- except Exception as e:

- logging.error(f"Error during startup indexing: {str(e)}")

-

@app.post("/documents/scan", dependencies=[Depends(optional_api_key)])

async def scan_for_new_documents():

"""

Manually trigger scanning for new documents in the directory managed by `doc_manager`.

-

+

This endpoint facilitates manual initiation of a document scan to identify and index new files.

It processes all newly detected files, attempts indexing each file, logs any errors that occur,

and returns a summary of the operation.

-

+

Returns:

dict: A dictionary containing:

- "status" (str): Indicates success or failure of the scanning process.

- "indexed_count" (int): The number of successfully indexed documents.

- "total_documents" (int): Total number of documents that have been indexed so far.

-

+

Raises:

HTTPException: If an error occurs during the document scanning process, a 500 status

code is returned with details about the exception.

@@ -970,25 +961,25 @@ def create_app(args):

async def upload_to_input_dir(file: UploadFile = File(...)):

"""

Endpoint for uploading a file to the input directory and indexing it.

-

- This API endpoint accepts a file through an HTTP POST request, checks if the

+

+ This API endpoint accepts a file through an HTTP POST request, checks if the

uploaded file is of a supported type, saves it in the specified input directory,

indexes it for retrieval, and returns a success status with relevant details.

-

+

Parameters:

file (UploadFile): The file to be uploaded. It must have an allowed extension as per

`doc_manager.supported_extensions`.

-

+

Returns:

- dict: A dictionary containing the upload status ("success"),

- a message detailing the operation result, and

+ dict: A dictionary containing the upload status ("success"),

+ a message detailing the operation result, and

the total number of indexed documents.

-

+

Raises:

HTTPException: If the file type is not supported, it raises a 400 Bad Request error.

If any other exception occurs during the file handling or indexing,

it raises a 500 Internal Server Error with details about the exception.

- """

+ """

try:

if not doc_manager.is_supported_file(file.filename):

raise HTTPException(

@@ -1017,23 +1008,23 @@ def create_app(args):

async def query_text(request: QueryRequest):

"""

Handle a POST request at the /query endpoint to process user queries using RAG capabilities.

-

+

Parameters:

request (QueryRequest): A Pydantic model containing the following fields:

- query (str): The text of the user's query.

- mode (ModeEnum): Optional. Specifies the mode of retrieval augmentation.

- stream (bool): Optional. Determines if the response should be streamed.

- only_need_context (bool): Optional. If true, returns only the context without further processing.

-

+

Returns:

- QueryResponse: A Pydantic model containing the result of the query processing.

+ QueryResponse: A Pydantic model containing the result of the query processing.

If a string is returned (e.g., cache hit), it's directly returned.

Otherwise, an async generator may be used to build the response.

-

+

Raises:

HTTPException: Raised when an error occurs during the request handling process,

with status code 500 and detail containing the exception message.

- """

+ """

try:

response = await rag.aquery(

request.query,

@@ -1074,7 +1065,7 @@ def create_app(args):

Returns:

StreamingResponse: A streaming response containing the RAG query results.

- """

+ """

try:

response = await rag.aquery( # Use aquery instead of query, and add await

request.query,

@@ -1134,7 +1125,7 @@ def create_app(args):

Returns:

InsertResponse: A response object containing the status of the operation, a message, and the number of documents inserted.

- """

+ """

try:

await rag.ainsert(request.text)

return InsertResponse(

@@ -1759,7 +1750,7 @@ def create_app(args):

"status": "healthy",

"working_directory": str(args.working_dir),

"input_directory": str(args.input_dir),

- "indexed_files": len(doc_manager.indexed_files),

+ "indexed_files": doc_manager.indexed_files,

"configuration": {

# LLM configuration binding/host address (if applicable)/model (if applicable)

"llm_binding": args.llm_binding,

@@ -1772,7 +1763,7 @@ def create_app(args):

"max_tokens": args.max_tokens,

},

}

-

+

# Serve the static files

static_dir = Path(__file__).parent / "static"

static_dir.mkdir(exist_ok=True)

@@ -1780,13 +1771,13 @@ def create_app(args):

return app

-

+

def main():

args = parse_args()

import uvicorn

app = create_app(args)

- display_splash_screen(args)

+ display_splash_screen(args)

uvicorn_config = {

"app": app,

"host": args.host,

diff --git a/lightrag/api/requirements.txt b/lightrag/api/requirements.txt

index 74776828..fc5afd58 100644

--- a/lightrag/api/requirements.txt

+++ b/lightrag/api/requirements.txt

@@ -1,4 +1,3 @@

-aioboto3

ascii_colors

fastapi

nano_vectordb

diff --git a/lightrag/api/static/index.html b/lightrag/api/static/index.html

index 66dc37bb..36690ec9 100644

--- a/lightrag/api/static/index.html

+++ b/lightrag/api/static/index.html

@@ -11,7 +11,7 @@

-

+


+This repository hosts the code of LightRAG. The structure of this code is based on nano-graphrag.

+

+ +

+

+

+

+

+